When it comes to running display advertising on the web, there are finite publishers, but hundreds of display ad networks who promise to run your banner ads with the best chance of conversion, the hottest technology and the most efficient algorithm.

Whether you call them ad exchanges or programmatic display advertising, there is one key question that plagues digital marketing managers who hear ad network reps beating the drum in one ear and upper management expecting measurable results in the other. That question is: does display advertising really work?

By that, I mean, is there tangible, incremental conversion lift coming from the display ads you run with Rocket Fuel, Quantcast, Chango and the like?

This post attempts to unpack how success is measured, what red herrings often crop up and how you can really get to the bottom of display advertising’s contribution to your company’s top line.

Defining the jargon in display

Click-through and view-through conversions, multi-touch attribution: what does it all mean?

Let’s quickly define our terms.

- Your customer sees a display ad or banner ad. S/he clicks on it and converts. That’s a simple click-through conversion.

- Your customer visits a page where your display ad is shown, but does not click on it. Later, s/he converts. That’s a view-through conversion.

- Because view-throughs are measured based on whether the ad loaded on your customer’s computer, we don’t really know if your customer saw the ad, consciously or subliminally. It might have loaded at the bottom of the page, and s/he never scrolled that far. To address this problem, some tools attempt to measure a metric called viewability. It answers the question: was your ad actually viewed, or did it just load somewhere on the page, unseen?

- The traditional conversion path used to be measured against simple click-through conversions. Whichever ad or channel had the last touch, or the last click, that led to your customer converting — that was the channel that got credit. That’s last-touch attribution.

- The new(er) view applies to complex advertising programs that touch a customer at many different moments on the way to a conversion. Let’s say your customer saw a banner ad but didn’t click, then Googled your brand name, then searched for coupons, and finally converted on a newsletter click. The perspective that considers all of these touches and gives some credit to each of them is called multi-touch attribution.
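To make the last two definitions concrete, here is a minimal Python sketch that assigns credit for the example path above under last-touch attribution and under one simple multi-touch rule (equal, or "linear", credit per touch). The channel labels and function names are hypothetical, and equal weighting is an assumption for illustration; commercial attribution tools use their own weighting models.

```python
from collections import defaultdict


def last_touch(path):
    """Give 100% of the conversion credit to the final touch."""
    return {path[-1]: 1.0}


def linear_multi_touch(path):
    """Split one conversion's credit equally across every touch (assumed rule)."""
    credit = defaultdict(float)
    share = 1.0 / len(path)
    for channel in path:
        credit[channel] += share
    return dict(credit)


# Hypothetical labels for the example path described in the text.
path = ["display_view", "branded_search", "coupon_search", "newsletter_click"]

print(last_touch(path))
print(linear_multi_touch(path))
```

Under last-touch, the banner ad gets nothing; under the linear rule, it gets a quarter of the conversion. Neither number proves the ad caused anything, which is why the tests described next matter.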

So what’s the right way to understand whether display ads are working? Should we spend thousands of dollars to implement multi-touch attribution technology, or apply Draconian rules that only reward channels for closing the deal with a customer on the final click?

It’s a confusing problem. But you have options to measure what’s going on in your conversion funnel.

Here are some things you can do to truly measure the impact of display.

A/B test your ads against a placebo

There is something called a Public Service Announcement (PSA) test. In this experiment, you serve your display ads to 50% of your audience — business as usual — but the other half of your audience is shown a placebo. This half sees a PSA. One former client, for example, used a “Smokey the Bear” ad about preventing forest fires.

This type of test can take some time to gather meaningful data — it can take a month or more and be quite costly — but the results will demonstrate whether the potential customers who saw your real display ads have a higher propensity to convert than those who saw Smokey the Bear.

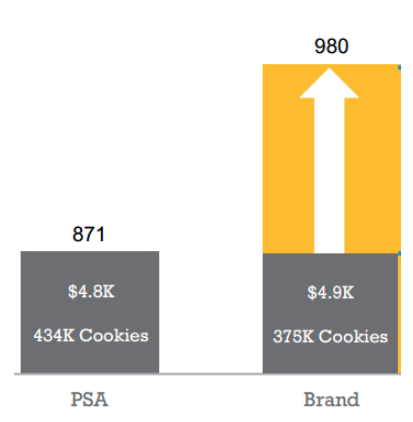

Consider this example of a real PSA A/B test we ran on behalf of a client in the home decor e-commerce sector.

Here’s what we uncovered by running this PSA test with Quantcast, an advertising partner:

The “Brand” group of ads, meaning the client’s regular ads, yielded 980 conversions (these are view-throughs) while prospects who saw Smokey the Bear ads converted 871 times.

When you factor in the number of cookies — in other words, how many times each ad was shown — the regular ads brought in 30% more conversions than Smokey the Bear. For you marketing statisticians, the confidence level here was 95%. You can find more info on statistical significance and what it means for marketers here.
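If you want to check lift and significance on your own test, the arithmetic can be sketched in a few lines of Python. This is a minimal sketch using a standard two-proportion z-test, which may not be the method our ad partner used. The audience sizes and conversion counts below are made up for illustration; they are not the client's data, since the cookie counts from this test aren't reproduced in this post.

```python
import math


def conversion_lift(conv_test, n_test, conv_control, n_control):
    """Relative lift of the test group's conversion rate over the control's."""
    rate_test = conv_test / n_test
    rate_control = conv_control / n_control
    return rate_test / rate_control - 1.0


def two_proportion_z_test(conv_test, n_test, conv_control, n_control):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_test = conv_test / n_test
    rate_control = conv_control / n_control
    pooled = (conv_test + conv_control) / (n_test + n_control)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_test + 1 / n_control))
    z = (rate_test - rate_control) / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail
    return z, p_value


# Hypothetical inputs: 1,300 conversions from 1,000,000 cookies shown real ads
# versus 1,000 conversions from 1,000,000 cookies shown the PSA.
lift = conversion_lift(1300, 1_000_000, 1000, 1_000_000)
z, p = two_proportion_z_test(1300, 1_000_000, 1000, 1_000_000)

print(f"lift = {lift:.1%}, z = {z:.2f}")
print("significant at the 95% level:", p < 0.05)
```

Note that the lift is computed on conversion rates, not raw conversion counts, which is why the number of cookies in each group has to be factored in.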

Is this a good result? On the one hand, it’s nice to see 30% incremental conversions. On the other hand, what should we make of the fact that almost 900 people saw Smokey the Bear and converted anyway? Is the handful of incremental conversions enough to justify the display ad spend here?

For this particular client, it wasn’t.

We didn’t only test IAB-standard display ads as shown above. We also tested ads on the Facebook Exchange. There, we found no statistically significant conversion difference between the client’s real ads and PSAs.

This type of testing is one way to assess value. Advanced attribution tools are another.

Advanced attribution technology

If you’re touching prospects on many levels and via multiple channels, some experts argue you’ll benefit from an advanced attribution platform. This technology takes into account all the touches that contribute to a single conversion and algorithmically estimates how each channel fits into the larger whole.

Convertro is one such tool. It’s useful for estimating how many real conversions a given channel is contributing based on all the (possibly hundreds of) touchpoints in the sales cycle.

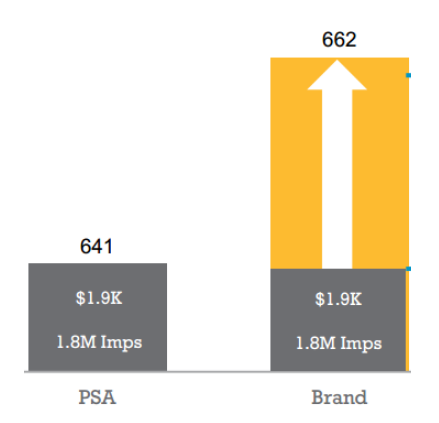

It can also show you data like this:

What we found interesting was the tool’s ability to show how often a given ad network played the role of Introducer (meaning that the ad network served the very first ad this prospect saw in your sales funnel), Closer (the ad network showed the last ad viewed or clicked by the prospect before converting), or Influencer (the ad network participated somewhere between introduction and conversion).

This breakdown can be useful in thinking about how important each ad partner is when it comes to guiding prospects to eventually buy.

The table above tells us that the ad networks pictured here acted as an influencer 97% of the time. That means they weren’t likely to be sourcing brand new prospects or “closing the deal” with soon-to-be customers, but probably contributed awareness along the way.
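If you have raw touch paths but no attribution platform, a rough version of this role breakdown can be sketched in Python. This is not how Convertro computes it; it is a simplified illustration with hypothetical path data and names, and it assumes that a network appearing as the only touch in a path counts as an Introducer.

```python
from collections import Counter


def role_shares(paths, network):
    """Share of a network's touches that were first, middle, or last in a path."""
    roles = Counter()
    for path in paths:
        last = len(path) - 1
        for position, touch in enumerate(path):
            if touch != network:
                continue
            if position == 0:
                roles["introducer"] += 1  # first ad in the path
            elif position == last:
                roles["closer"] += 1  # last touch before converting
            else:
                roles["influencer"] += 1  # anywhere in between
    total = sum(roles.values())
    return {role: count / total for role, count in roles.items()} if total else {}


# Hypothetical converting paths; "network_a" stands in for a display partner.
paths = [
    ["paid_search", "network_a", "email"],
    ["network_a", "paid_search", "network_a", "email"],
    ["organic", "network_a", "network_a", "paid_search"],
]

shares = role_shares(paths, "network_a")
print(shares)
```

In this toy data the network is an influencer on four of its five touches and never the closer, the same kind of pattern the table shows.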

Last-click attribution

The conservative play when it comes to evaluating many different channels is to give maximum weight to last-click conversions and revenue.

That’s because only one lucky ad or channel can be responsible for the final click or view before a conversion. If you credit each channel with view-throughs, you might be praising multiple channels for the same conversion. But using a tool like Google Analytics to report on conversions and revenue from a given traffic source will, in the traditional view, tell you which channel drove the last click. Revenue resulting from each channel will be counted exactly once.
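The double-counting risk is easy to see with a small Python sketch. The orders, channel names and function names here are hypothetical; the point is only to compare what channels claim when each one takes full credit for every order it touched against what last-touch reporting assigns.

```python
from collections import defaultdict

# Hypothetical orders, each with its revenue and the channels that touched it,
# listed in the order the customer encountered them.
orders = [
    {"revenue": 120.0, "touches": ["display", "paid_search", "email"]},
    {"revenue": 80.0, "touches": ["display", "email"]},
    {"revenue": 50.0, "touches": ["paid_search"]},
]


def revenue_by_any_touch(orders):
    """Every channel claims the full revenue of each order it touched."""
    claimed = defaultdict(float)
    for order in orders:
        for channel in set(order["touches"]):  # one claim per channel per order
            claimed[channel] += order["revenue"]
    return dict(claimed)


def revenue_by_last_touch(orders):
    """Only the final touch gets the order's revenue, so nothing is counted twice."""
    credited = defaultdict(float)
    for order in orders:
        credited[order["touches"][-1]] += order["revenue"]
    return dict(credited)


actual = sum(order["revenue"] for order in orders)
any_touch = revenue_by_any_touch(orders)
last = revenue_by_last_touch(orders)

print("actual revenue:", actual)
print("claimed under any-touch:", sum(any_touch.values()))
print("credited under last-touch:", sum(last.values()))
```

With these made-up orders, the channels collectively claim more than twice the revenue that actually came in, while the last-touch totals add up to the real figure.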

Some experts argue that last-click attribution has gone out of style and a more holistic view is in order for how prospects interact with your business on their journey through the conversion funnel. It’s true that tracking ROI has become more complex with the advent of drip campaigns, inbound marketing and many kinds of interactive ads. Still, a more rigid reading of the analytics can offer checks and balances against overspending.

The best thing you can do for your business is to look at attribution in more than one way. If you can afford a PSA A/B test, it’s quite informative (and in our example case, quite sobering). Otherwise, assessing ad network performance via multiple free tools will give you more insight than simply trusting the dashboard of your ad partner(s). With several perspectives at your disposal, it’s easier to make a call on which channels should be expanded and which can be cut.
